Silicon revolution: the epic journey of computer processors and what lies ahead

The digital revolution began with enormous, clunky computers in the 1940s and has since produced the pocket-sized devices of today. Computer processors powered and guided that transformation. Everything from smartphones and gaming consoles to supercomputers is built around a processor, which makes it the core component.

The real question is: how did we get from vacuum tubes to billions of integrated transistors? And which startling new inventions are transforming the modern world? In this piece, we take a closer look at the astonishing progression of processor technology. So sit back, because this trip through silicon history is nothing less than amazing.

The Origins of Computer Processors

In 1971, Intel introduced the world to the first commercial microprocessor, the Intel 4004, a groundbreaking 4-bit processor able to perform about 60,000 operations per second. At the time, this was an extraordinary accomplishment. It ran at a clock speed of 740 kHz and contained only 2,300 transistors, fabricated on a 10-micrometer process.

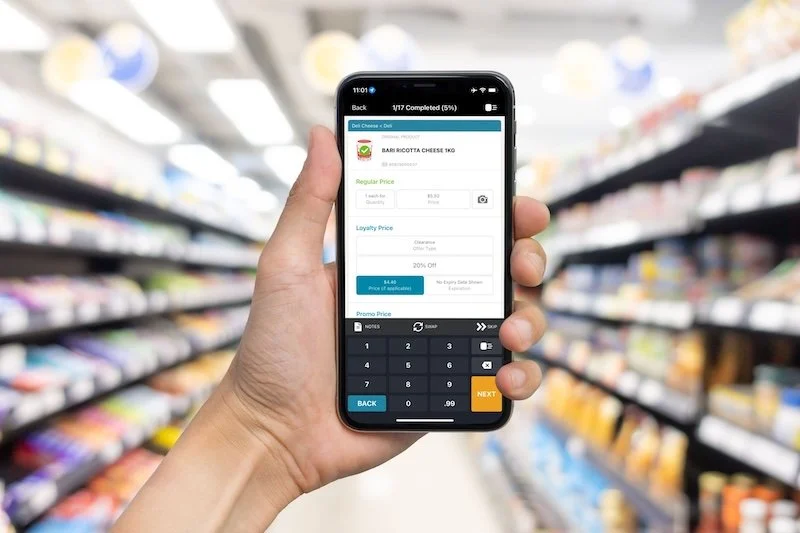

The tiny chip would go on to change the world of technology forever. After all, the processor in the smartphone you may be reading this article on is its direct evolutionary descendant.

A successor to the first microprocessor was not long in coming. Around three years later, in 1974, Intel increased the speed and power of its processors with the release of the 8-bit 8080. This processor ran at a 2 MHz clock speed, was built with 6,000 transistors, and powered iconic personal computers such as the Altair 8800.

Around the same time, Motorola entered the game with its new 6800 series, whose 16-bit address bus could address 64 KB (65,536 bytes) of memory. These new and improved processors shifted computing from room-sized mainframes to personal computers and marked the beginning of the microprocessor era.

The Evolution from Single-Core to Multi-Core CPUs

As software grew more demanding in the late 1990s and the need for multitasking increased, single-core processors struggled to keep up with the demand for speed. Multi-core processors were the solution, as they could run several tasks simultaneously on separate cores. Let us explore the path this innovation took:

● 2001: IBM POWER4 – This was the first commercially available dual-core processor, working at 1.1 GHz and having over 170 million transistors. It transformed the performance of servers and set a new standard for enterprise computing.

● 2005: AMD Athlon 64 X2 – This model extended dual-core technology to consumer desktops. It offered speeds of up to 2.4 GHz and completely changed home computing for gamers and multitaskers.

● 2006: Intel Core 2 Duo – This model improved both efficiency and power with a 65 nm process. It came to dominate the market by consuming 40% less energy than its predecessors while delivering 40% more performance, according to Intel.

● 2017: AMD Ryzen Threadripper – This was a large leap in high-performance computing, offering 16 cores and 32 threads clocked at 3.4 GHz. It proved popular among professionals, gamers, and content creators.

Now, CPUs with 8, 12, and even 64 cores are readily available, and AI applications, gaming, and professional software have improved drastically in speed and efficiency. Multi-core technology today forms the backbone of computing and continues to drive innovation in industries such as entertainment and scientific research.
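To make the idea of running tasks simultaneously a little more concrete, here is a minimal sketch in Python. The function names and the prime-counting workload are illustrative assumptions, not something taken from any particular processor or product; the sketch simply hands independent CPU-bound tasks to separate worker processes, which the operating system can schedule on different cores.

```python
from concurrent.futures import ProcessPoolExecutor
import os


def count_primes(limit):
    """Count primes below `limit` by trial division (deliberately CPU-bound)."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def count_primes_parallel(limits, workers=None):
    """Run one count_primes task per limit, spread across worker processes."""
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(count_primes, limits))


if __name__ == "__main__":
    # Hypothetical workload: four independent tasks of different sizes.
    limits = [20_000, 30_000, 40_000, 50_000]
    print("Logical cores reported by the OS:", os.cpu_count())
    print(count_primes_parallel(limits))
```

On a single-core machine the same code still runs, but the tasks take turns on one core instead of running side by side, which is roughly the bottleneck that multi-core designs set out to remove.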

After all, processors are needed for every operation: even posting the latest sports news on Facebook requires a computer or smartphone, which almost certainly has a multi-core processor. So the next time you read sports news or memes, remember that none of it would exist without a processor.

How Moore’s Law Shaped Processor Development

In 1965, Gordon Moore, Intel's co-founder, predicted that the number of transistors on a chip would double every year; in 1975 he revised the pace to a doubling every two years. This observation is known as Moore's Law, and it drove the development of the semiconductor industry. The Intel 4004 from 1971 was remarkable for having 2,300 transistors. The Intel 80286, released in 1982, had 134,000 transistors and ran at clock speeds of several megahertz. By the time the Pentium processor was released in 1993, the count had reached 3.1 million transistors.

Apple launched the M1 in 2020, boasting a staggering 16 billion transistors built on a 5 nm process, continuing the trend of increased performance and efficiency. Over the same decades, clock speeds rose from kilohertz to gigahertz.
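The figures quoted above can be checked against the doubling rule with a little arithmetic. The sketch below is only a back-of-the-envelope calculation using two data points from this article (2,300 transistors in 1971 and 16 billion in 2020); the function names are made up for illustration, and real chips differ in die size and purpose, so the result is indicative rather than exact.

```python
import math


def implied_doubling_years(count_start, year_start, count_end, year_end):
    """Years per doubling implied by two (year, transistor count) points."""
    doublings = math.log2(count_end / count_start)
    return (year_end - year_start) / doublings


def projected_count(count_start, year_start, year, doubling_years=2.0):
    """Transistor count projected from a start point at a fixed doubling period."""
    return count_start * 2 ** ((year - year_start) / doubling_years)


# Data points quoted in the article: Intel 4004 (1971) and Apple M1 (2020).
period = implied_doubling_years(2_300, 1971, 16_000_000_000, 2020)
print(f"Implied doubling period: {period:.2f} years")

# What a strict two-year doubling from the 4004 would predict for 2020.
print(f"Two-year projection for 2020: {projected_count(2_300, 1971, 2020):.3g}")
```

The implied period comes out slightly above two years, so these two chips sit close to, though a little behind, a strict two-year doubling.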

These changes, paired with drastic drops in cost, made computing affordable and accessible to far more people. Though these improvements are now running into physical limits, advancements like TSMC's and Samsung's 3 nm and experimental 2 nm process nodes demonstrate that the industry still has life.

The Rise of GPUs and Specialised Processors

Graphics processing units (GPUs) started out rendering graphics, but they have since shifted dramatically towards general-purpose computation. The launch of NVIDIA's CUDA technology back in 2006 enabled developers to use graphics processors for scientific research, AI, and machine learning tasks. Current GPUs, such as the NVIDIA RTX 4090, far exceed their predecessors with 24 GB of GDDR6X memory and 16,384 CUDA cores. These extreme specifications make them indispensable for data-heavy work.

The evolution of AI also gave birth to specialised processors such as Google's Tensor Processing Units (TPUs). Introduced in 2015, these units were custom-made for AI workloads, and later generations deliver up to 420 teraflops. Apple also has its own Neural Engine within the M1 chip, which boosts machine learning capabilities by performing 11 trillion operations each second. These advancements have taken computing beyond traditional CPUs and are transforming industries from self-driving cars to personal healthcare.

Energy Efficiency in Modern Processor Design

Due to growing environmental concerns and the spread of mobile devices, energy efficiency has become a critical focus in processor design. Today, performance per watt is one of the most important metrics for modern chips, which means efficiency has to be achieved without compromising speed.
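Performance per watt is simply the work done divided by the power drawn, so gains on both sides compound. As a rough illustration, the sketch below reuses the Core 2 Duo figures quoted earlier in this article (40% more performance, 40% less energy) and assumes, for simplicity, that the energy figure can be read as average power; the function name is hypothetical.

```python
def perf_per_watt_gain(perf_change, power_change):
    """Relative performance-per-watt gain from fractional changes.

    perf_change=0.40 means 40% more performance;
    power_change=-0.40 means 40% less power.
    """
    return (1 + perf_change) / (1 + power_change)


# Assumption: the article's "40% less energy" is treated as 40% less power.
gain = perf_per_watt_gain(0.40, -0.40)
print(f"Performance per watt improves by about {gain:.2f}x")
```

Under that assumption the improvement works out to roughly 2.3 times, more than either 40% figure suggests on its own.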

As the table indicates, the tremendous increases in transistor count have been accompanied by an even more striking reduction in the power consumed per operation, thanks to improved architectures and process nodes. By pioneering energy-efficient technology with the M1, Apple is helping to make the world of electronics more sustainable.

The Role of AI in Processor Innovation

Artificial intelligence has completely changed the way processors are designed and operated. Today, AI accelerators and machine learning algorithms are incorporated directly into the processor architecture, optimising everything from power usage to task allocation.

NVIDIA is contributing to the growth of artificial intelligence and also uses AI algorithms to enhance its GPUs, enabling games with hyper-realistic graphics and real-time ray tracing. Apple's M1 chip is another prominent example. It has a Neural Engine with 16 cores that can perform 11 trillion operations a second.

This integration greatly improves tasks like image processing, voice recognition, and real-time translation. Google's TPUs have also accelerated AI computations, enabling more sophisticated tasks, while AI-driven optimisation contributes to lower power consumption and better heat distribution.

Challenges in Processor Miniaturisation

As manufacturing moves to ever smaller nanometer scales, new problems arise. Shrinking a chip involves far more than simply making its parts smaller. Several physical and technical challenges have the potential to slow down progress, such as:

Heat Dissipation: Densely packed transistors produce a significant amount of heat in tight spaces, making cooling incredibly important.

Quantum Tunneling: At nanoscale dimensions, electrons can tunnel through a transistor's thin insulating layers, resulting in leakage current that wastes power and lowers performance.

Manufacturing Costs: Production expenses rise sharply with the adoption of EUV lithography and other advanced fabrication techniques.

Material Limitations: Silicon, which has served as the foundation of processors for decades, is becoming increasingly difficult to scale further, driving interest in materials like carbon nanotubes and graphene.

To address these problems, the industry continues to innovate with 3D chip stacking, heterogeneous computing, and new semiconductor materials.

Quantum Leaps and Light-Speed Futures: The Next Frontier in Processors

With quantum and optical technologies on the horizon, the future of processors could be jaw-dropping, as they may change the very definition of computing. Google's Sycamore and IBM's Eagle quantum processors use qubits to tackle certain problems that classical computers are estimated to need millennia to solve. Optical processors, meanwhile, could replace electrical signals with light, promising far higher speeds with far less wasted heat.

Indeed, we may be on the eve of a revolution, and as these technologies mature, the limits of what can be computed will be pushed far beyond where they stand today. The journey of computers and their capabilities has only begun, and the sky is the limit.
